The hardest part of modern school safety is not the lack of information; it is the flood of it. Threats surface through texts, social media posts, rumors in hallways, and sometimes through real weapons on camera. That's why more schools are turning to AI to help them identify what matters and respond in time.

The challenge is that many of the threats schools receive turn out not to be credible. For example, research on specific categories such as school bomb threats shows that up to 90 percent are ultimately classified as hoaxes, while broader studies of school violence incidents suggest that nearly 30 percent involve false reports. Every false alarm diverts staff and first responders from other priorities, making it harder to identify the situations that truly matter. Yet the most crucial question remains: will an AI system detect the real incident on the day it matters most?

Families, educators, and safety leaders are justified in being cautious. AI can support safety, but only if it improves accuracy rather than introducing new uncertainty. A recent incident in Maryland showed what happens when that balance is off.

How an AI False Alarm Escalated Into a Dangerous School Incident

In Baltimore County, a 16-year-old student was detained at gunpoint after an AI system misidentified a crumpled Doritos bag as a firearm. He had finished his snack after school and placed the wrapper in his pocket. Minutes later, several police cars arrived. Officers ordered him to the ground, handcuffed him, and searched him.

When officers finally showed him the image that had triggered the alert, it did not show a weapon at all. It showed the chip bag.

The student later said he wondered if he was going to be killed.

His fear was not irrational; it stemmed from a moment when an incorrect alert created unnecessary urgency, pressure, and stress.

This was not a case of human overreaction. It was a case of technology providing bad information at precisely the wrong time.

AI Weapon Detection Failures: False Positives and Missed Threats

The same AI system used in Maryland was also deployed at Antioch High School in Tennessee, where a student opened fire in the cafeteria earlier this year. Despite cameras capturing the incident, the AI did not detect the weapon.

In Maryland, the system produced a false positive, escalating a harmless moment. In Tennessee, it produced a false negative, failing to recognize a real threat.

When the same technology makes severe errors in both directions, it becomes clear that the problem is not a one-off glitch. It is systemic.

These tools were deployed in high-stakes environments without the validated accuracy needed to support the people who depend on them.

Why AI Accuracy Is the Single Most Important Requirement

The conversation about AI in schools should not start with features, automation, or cost. It should start with accuracy. Every decision that follows depends on it.

1. False positives can cause harm

A system that mistakes everyday objects for weapons introduces unnecessary danger. It pushes staff, officers, and students into high-tension confrontations that should never have happened. Even if the mistake is discovered quickly, the emotional and psychological impact remains.

2. False negatives create a false sense of security

If a system fails to detect an actual threat, the school community may believe it is protected when, in fact, it is not. That is more than a technical gap. It affects how people plan, how they respond in a crisis, and how they judge their own level of risk.

3. Accuracy shapes trust

Parents want to know their children are safe. Teachers want to know they have reliable support. Officers want to respond to real threats, not false ones. When technology behaves inconsistently, trust erodes quickly, and rebuilding it is challenging.

Accuracy is not just one performance metric among many. It is the baseline requirement for responsible deployment.
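To make the two failure modes concrete, here is a minimal sketch of how a district might tally a detector's alerts against what actually happened during a review period. The `ConfusionCounts` type, the `detection_rates` function, and the counts in the usage line are hypothetical illustrations. They are not data from any real deployment or part of any vendor's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Hypothetical tallies from reviewing a detector's alerts against ground truth."""
    true_positives: int   # real weapons that triggered an alert
    false_positives: int  # alerts on harmless objects (e.g., a chip bag)
    false_negatives: int  # real weapons that produced no alert


def detection_rates(c: ConfusionCounts) -> dict[str, float]:
    """Return precision, recall, and the two error rates schools care about most."""
    alerts = c.true_positives + c.false_positives
    actual_threats = c.true_positives + c.false_negatives
    return {
        # Of all alerts raised, the share that were real threats.
        "precision": c.true_positives / alerts if alerts else 0.0,
        # Of all real threats, the share that raised an alert.
        "recall": c.true_positives / actual_threats if actual_threats else 0.0,
        # Of all alerts raised, the share that were false alarms.
        "false_alarm_share": c.false_positives / alerts if alerts else 0.0,
        # Of all real threats, the share that were missed entirely.
        "miss_rate": c.false_negatives / actual_threats if actual_threats else 0.0,
    }


# Hypothetical example: 20 alerts, only 4 of them real, and 1 real threat missed.
print(detection_rates(ConfusionCounts(true_positives=4, false_positives=16, false_negatives=1)))
# {'precision': 0.2, 'recall': 0.8, 'false_alarm_share': 0.8, 'miss_rate': 0.2}
```

A detector can look strong on one of these numbers while failing on the other. Raising the alert threshold to suppress false alarms typically increases the miss rate, and lowering it does the reverse. For that reason, both figures need to be reported, and ideally measured in the school's own environment.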

AI Accuracy Standards Schools Should Require From Safety Vendors

AI can support school safety, but only when it meets clear expectations. Districts evaluating AI video security solutions should insist on:

1. Independent, transparent accuracy validation

Schools should not rely solely on vendor claims or controlled demonstrations. They need independently validated accuracy data drawn from their own environment or one similar to it.

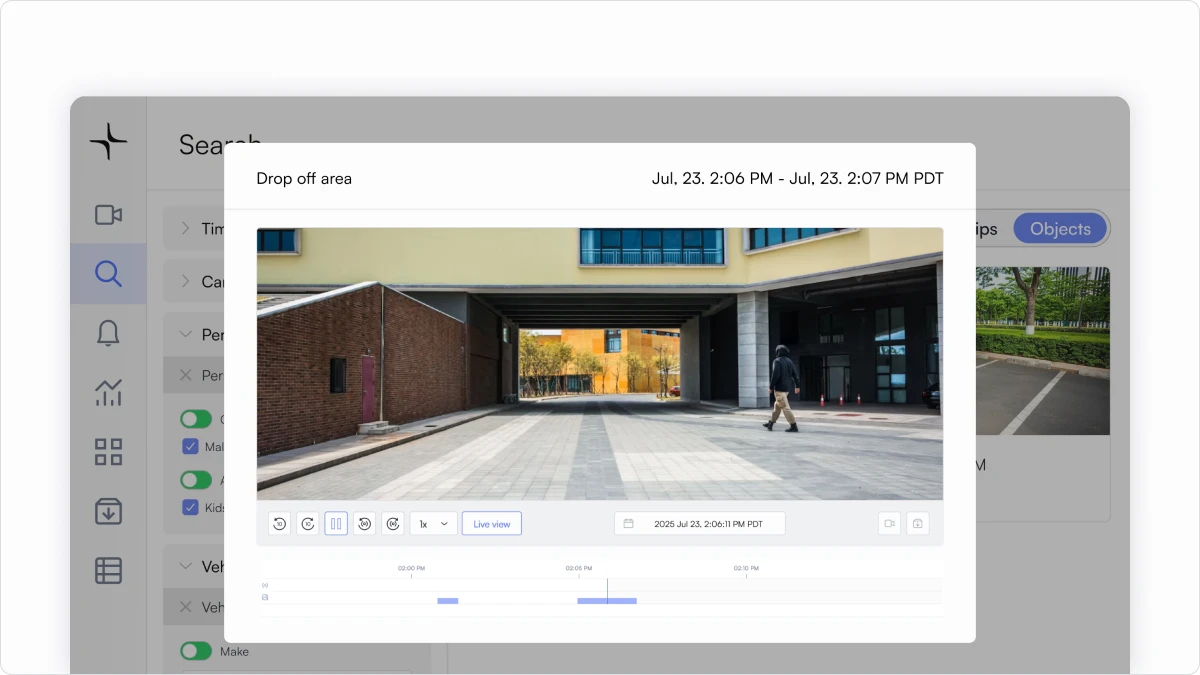

2. Contextual and behavior-based detection

Identification should not rely on a single frame or a single shape. AI needs to understand how a weapon is carried and used, so a holstered gun on a uniformed officer does not trigger the same alert as someone raising a gun toward others.

3. Human verification before escalation

When a situation could lead to a high-stakes response, human judgment must guide the next steps. AI should provide clarity, but people should make the call.

4. Reliable performance on all camera types

Accuracy should not require a closed, proprietary stack. Schools should be free to choose the right cameras without sacrificing detection quality.

5. Ongoing improvement

AI should continue learning from real-world examples and improve measurably over time rather than remain static.

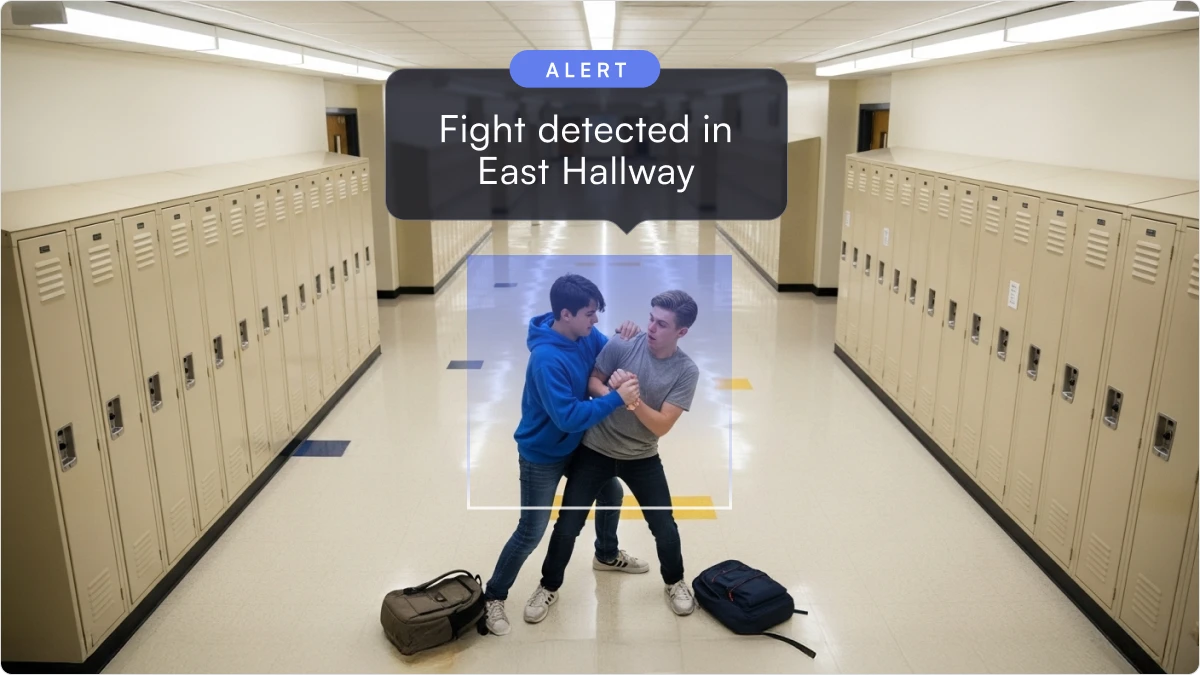

6. Alerts with full context

Schools should receive video clips that show what happened in context, not isolated fragments that can be misleading.

These standards help ensure that AI supports safety teams instead of overwhelming them.
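Standards 2 and 3 describe a workflow as much as a model. The sketch below shows, under stated assumptions, how that gating could look: contextual signals and a confidence threshold filter detections first, and nothing becomes a dispatch unless a human reviewer has confirmed it. Every name here is hypothetical, including `Detection`, `route_detection`, the `holstered_on_uniformed_officer` flag (assumed to come from a behavior-aware model), and the default threshold. None of it is drawn from any vendor's product.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    LOG_ONLY = "log_only"            # record for later review; no one is paged
    REQUEST_HUMAN_REVIEW = "review"  # send the alert clip to a trained reviewer
    DISPATCH = "dispatch"            # escalate to responders


@dataclass(frozen=True)
class Detection:
    """Hypothetical detector output for one event."""
    confidence: float                     # model score in [0, 1]
    holstered_on_uniformed_officer: bool  # assumed output of a contextual model


def route_detection(
    det: Detection,
    reviewer_decision: Optional[bool],  # None = not yet reviewed
    review_threshold: float = 0.5,      # hypothetical; set from local validation data
) -> Action:
    """Decide the next step. Only a human confirmation can lead to DISPATCH."""
    # Context first: a holstered weapon on a uniformed officer is routine.
    if det.holstered_on_uniformed_officer:
        return Action.LOG_ONLY
    # Below the threshold, log only. This trades fewer false alarms for more misses.
    if det.confidence < review_threshold:
        return Action.LOG_ONLY
    # A person must look at the clip before anything escalates.
    if reviewer_decision is None:
        return Action.REQUEST_HUMAN_REVIEW
    return Action.DISPATCH if reviewer_decision is True else Action.LOG_ONLY


# Hypothetical chip-bag case: the model is fairly confident, but a reviewer dismisses it.
chip_bag = Detection(confidence=0.62, holstered_on_uniformed_officer=False)
print(route_detection(chip_bag, reviewer_decision=None))   # Action.REQUEST_HUMAN_REVIEW
print(route_detection(chip_bag, reviewer_decision=False))  # Action.LOG_ONLY
```

The important property is structural. No code path returns `Action.DISPATCH` unless `reviewer_decision` is `True`, which mirrors the principle that AI provides clarity but people make the call.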

How AI Can Support, Not Replace, School Safety Teams

AI is often misunderstood as a replacement for human decision-making. In reality, the best systems are designed to support skilled individuals by providing them with clearer information and more time to act.

Humans remain responsible for interpreting the situation, verifying what is happening, and choosing the appropriate response. AI helps by removing guesswork and reducing the noise that distracts teams from what matters.

When accuracy is high and transparency is built in, AI becomes a tool for confidence rather than a source of concern.

A Responsible Path Forward for AI in School Safety

The incidents in Maryland and Tennessee are a reminder that accuracy is not a minor detail. It is the factor that determines whether AI reduces harm or increases it. As AI becomes more prevalent in public spaces, communities deserve clarity about how these systems operate, how they are tested, and how reliably they perform under real-world conditions.

At Lumana, accuracy is not a marketing claim. It is the foundation of our entire platform. Our AI is trained on millions of real-world examples, validated across diverse camera types and environments, and continuously tested so that performance keeps improving over time. We design our systems to recognize both objects and behaviors, which helps distinguish routine situations, such as a holstered weapon on an officer, from genuine threats. Every alert includes full context so people can make informed decisions, and detection quality does not depend on purchasing proprietary cameras.

If you are evaluating solutions or planning upgrades, we would be glad to demonstrate how high-accuracy detection can support your safety goals and how to integrate AI into your environment in a way that builds confidence rather than concern.

Learn more by talking with our team: www.lumana.ai/get-demo
